Section03 Least Squares Approximations

- When $Ax = b$ has no solution, multiply by $A^T$ and solve $A^TA\hat{x} = A^Tb$.
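As a minimal sketch of this rule, consider the simplest case where $A$ is a single column $a$, so the normal equation is one scalar equation. The numbers below are made up for illustration, and exact fractions are used only to keep the arithmetic readable.

```python
from fractions import Fraction

# Three equations in one unknown: 2x = 1, 3x = 2, 4x = 4 (no exact solution).
a = [2, 3, 4]  # the single column of A (illustrative values)
b = [1, 2, 4]  # right-hand side (illustrative values)

# Multiplying by A^T collapses the system to one equation: (a.a) x_hat = a.b
ata = sum(ai * ai for ai in a)
atb = sum(ai * bi for ai, bi in zip(a, b))
x_hat = Fraction(atb, ata)

# The error e = b - a * x_hat is perpendicular to the column a.
e = [bi - ai * x_hat for ai, bi in zip(a, b)]
a_dot_e = sum(ai * ei for ai, ei in zip(a, e))

print(x_hat, a_dot_e)
```

The zero dot product is the one-column version of $A^T(b - A\hat{x}) = 0$.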

Minimizing the Error

By geometry

- Find the line through $b$ that is perpendicular to the column space of $A$. The point where this line meets the column space is the projection $p$, the point in the column space of $A$ nearest to $b$.

By algebra

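Every vector $b$ can be split into two parts: $b = p + e$, where $p$ is in the column space of $A$ and $e$ is in the nullspace of $A^T$.

Because $p$ is in the column space of $A$, the equation $A\hat{x} = p$ is always solvable.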

- Squared length for any $x$: $\|Ax - b\|^2 = \|Ax - p\|^2 + \|e\|^2$

- proof
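The squared-length split can be checked numerically. The sketch below assumes a small example with $A$ having columns $(1,1,1)$ and $(0,1,2)$ and $b = (6,0,0)$, and takes $\hat{x} = (5,-3)$ as already known; the helper names `matvec` and `sq_len` and the trial vector `x` are hypothetical.

```python
A = [[1, 0], [1, 1], [1, 2]]
b = [6, 0, 0]
x_hat = [5, -3]  # least squares solution for this A and b (assumed known here)

def matvec(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

def sq_len(v):
    """Squared length of a vector."""
    return sum(vi * vi for vi in v)

p = matvec(A, x_hat)                    # projection of b onto the column space
e = [bi - pi for bi, pi in zip(b, p)]   # error, perpendicular to the columns

# A^T e should be the zero vector, confirming that x_hat is the minimizer.
At_e = [sum(row[j] * ei for row, ei in zip(A, e)) for j in range(2)]

# Compare both sides of the identity for an arbitrary trial x.
x = [1, 1]
Ax = matvec(A, x)
lhs = sq_len([u - v for u, v in zip(Ax, b)])
rhs = sq_len([u - v for u, v in zip(Ax, p)]) + sq_len(e)

print(lhs, rhs, sq_len(e))
```

Since $\|Ax - p\|^2 \ge 0$, the left side can never drop below $\|e\|^2$, and it reaches that value exactly when $Ax = p$.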

By calculus

- The partial derivatives of $\|Ax - b\|^2$ are zero when $A^TA\hat{x} = A^Tb$

- proof

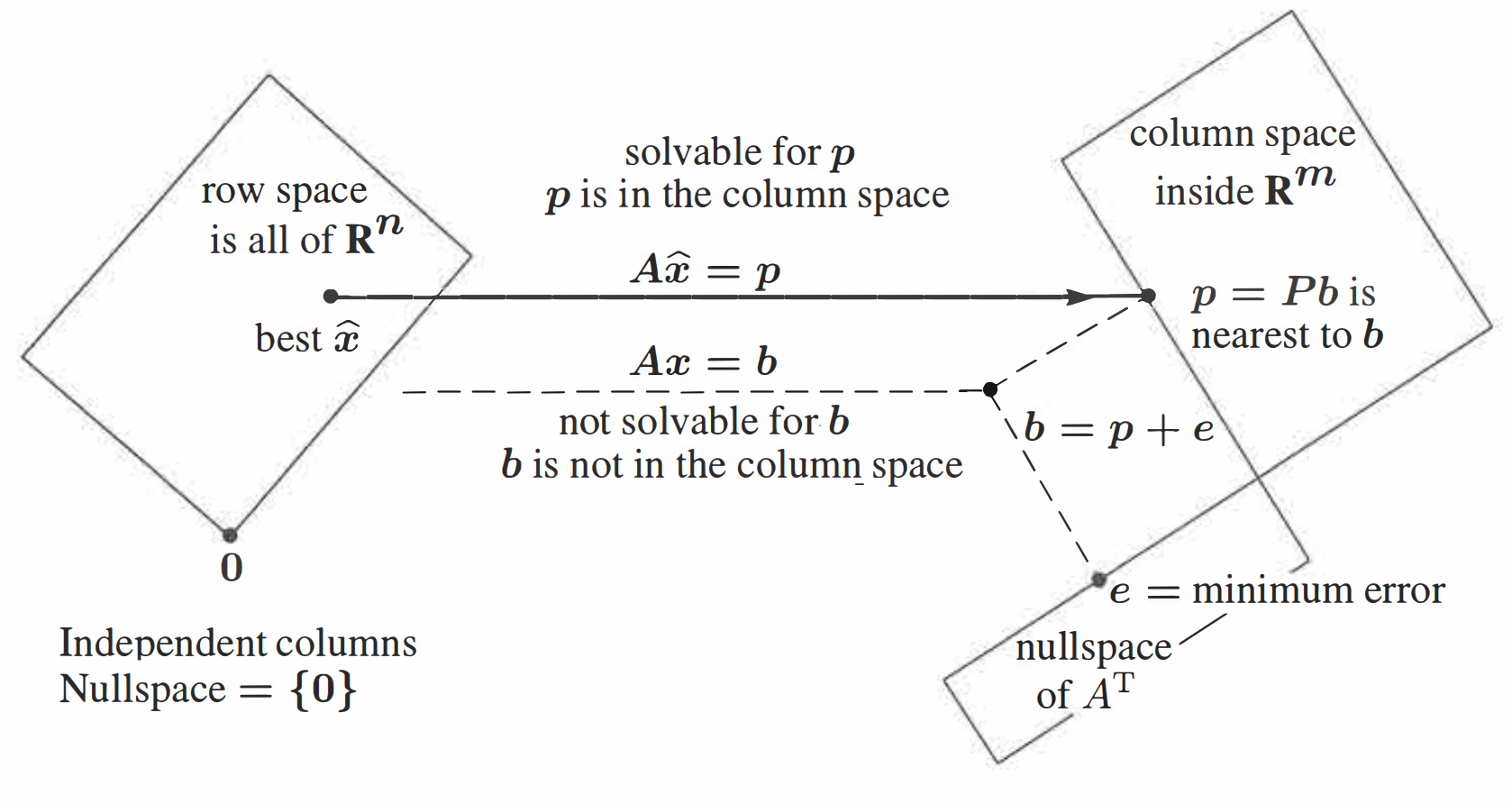

The Big Picture for Least Squares

- The row space of $A$ is all of $\mathbf{R}^n$ (because $A$ has full column rank).

- The vector $b$ is a point that is not in the column space of $A$.

- The vector $b$ can be split into two parts, $b = p + e$:

- The part $p$, which is in the column space of $A$.

- The part $e$, which is orthogonal to the column space of $A$ (it lies in the nullspace of $A^T$).

Fitting a Straight Line

One Factor

- $A$ is $m \times 2$ with columns $(1, \dots, 1)$ and $(t_1, \dots, t_m)$

- Solve $A^TA\hat{x} = A^Tb$ for $\hat{x} = (C, D)$. The errors are $e_i = b_i - C - Dt_i$
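A short sketch of the straight-line fit $b \approx C + Dt$: the $2 \times 2$ normal equations are built from sums over the data and solved by Cramer's rule. The data points are illustrative, and the variable names (`st`, `stt`, `sb`, `stb`) are hypothetical shorthands for the sums.

```python
from fractions import Fraction

t = [0, 1, 2]  # measurement times (illustrative)
b = [6, 0, 0]  # measurements (illustrative)
m = len(t)

# Entries of A^T A = [[m, st], [st, stt]] and A^T b = [sb, stb]
# for A with columns (1, ..., 1) and (t_1, ..., t_m).
st = sum(t)
stt = sum(ti * ti for ti in t)
sb = sum(b)
stb = sum(ti * bi for ti, bi in zip(t, b))

# Cramer's rule on the 2x2 system; det is nonzero when the t_i are not all equal.
det = m * stt - st * st
C = Fraction(stt * sb - st * stb, det)
D = Fraction(m * stb - st * sb, det)

# Errors e_i = b_i - C - D t_i
errors = [bi - C - D * ti for ti, bi in zip(t, b)]

print(C, D, errors)
```

For this data the best line is $5 - 3t$, and the errors sum to zero.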

Computation

The derivative of a square is linear, so minimizing the sum of squared errors leads to linear equations.

- When the columns of $A$ are perpendicular to each other, $A^TA$ is a diagonal matrix, so the equation $A^TA\hat{x} = A^Tb$ is easy to solve (the Gram-Schmidt idea)
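One way to get perpendicular columns in a line fit is to shift the times so their mean is zero. The sketch below assumes that shift (the data values are illustrative); the two unknowns then decouple and each is found by a single division.

```python
from fractions import Fraction

t = [1, 3, 5]  # original times (illustrative)
b = [1, 2, 4]  # measurements (illustrative)
m = len(t)

# Shift the times so they average to zero: T_i = t_i - mean(t).
t_mean = Fraction(sum(t), m)
T = [ti - t_mean for ti in t]

# The off-diagonal entry of A^T A is sum(T), which is now zero,
# so the columns (1, ..., 1) and (T_1, ..., T_m) are perpendicular.
off_diagonal = sum(T)

# Diagonal system: m * C = sum(b) and sum(T^2) * D = sum(T * b).
C = Fraction(sum(b), m)
D = sum(Ti * bi for Ti, bi in zip(T, b)) / sum(Ti * Ti for Ti in T)

print(off_diagonal, C, D)
```

The fitted line in the original variable is $C + D(t - \bar{t})$, here $\tfrac{7}{3} + \tfrac{3}{4}(t - 3)$.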

Dependent Columns in $A$: What is $\hat{x}$?

- Example

- These equations have infinitely many solutions.
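A minimal sketch of the dependent-column case, using a made-up $2 \times 2$ system with two identical columns. The pivot column is picked by inspection here rather than by elimination, and the candidate solutions are chosen by hand; the point is that many $\hat{x}$ give the same projection $p$.

```python
from fractions import Fraction

A = [[1, 1], [1, 1]]  # two identical columns (illustrative)
b = [3, 1]

def times(M, x):
    """Multiply matrix M (list of rows) by vector x."""
    return [sum(mij * xj for mij, xj in zip(row, x)) for row in M]

# Keep only the pivot column (the first column, chosen by inspection).
a = [row[0] for row in A]
coeff = Fraction(sum(ai * bi for ai, bi in zip(a, b)),
                 sum(ai * ai for ai in a))
p = [coeff * ai for ai in a]  # projection of b onto the column space

# Every x_hat with x1 + x2 = 2 solves A^T A x_hat = A^T b and gives the same p.
candidates = [(2, 0), (0, 2), (1, 1), (5, -3)]
projections = [times(A, x) for x in candidates]

print(p, projections)
```

The projection $p$ is unique even though $\hat{x}$ is not.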

Fitting by a Parabola

Nonlinear Problem

- Even with a nonlinear function like $t^2$, the unknowns $C, D, E$ in $C + Dt + Et^2$ still appear linearly!
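Because the unknowns enter linearly, a parabola fit is still a linear least squares problem with three columns $1$, $t$, $t^2$. The sketch below uses made-up data and a small hypothetical helper `solve` (exact Gauss-Jordan elimination) to solve the $3 \times 3$ normal equations.

```python
from fractions import Fraction

def solve(M, rhs):
    """Solve the square system M x = rhs by Gauss-Jordan elimination (exact).
    Sketch only: assumes M is invertible."""
    n = len(M)
    aug = [[Fraction(v) for v in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and swap it up.
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        # Scale the pivot row, then clear the column in all other rows.
        aug[col] = [v / aug[col][col] for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n] for row in aug]

t = [-2, -1, 0, 1, 2]  # times (illustrative)
b = [4, 1, 0, 1, 5]    # measurements (illustrative)

# Each row of A is (1, t_i, t_i^2): nonlinear in t, linear in C, D, E.
A = [[1, ti, ti ** 2] for ti in t]
AtA = [[sum(row[i] * row[j] for row in A) for j in range(3)] for i in range(3)]
Atb = [sum(row[i] * bi for row, bi in zip(A, b)) for i in range(3)]

C, D, E = solve(AtA, Atb)

# The error vector is perpendicular to all three columns of A.
e = [bi - (C + D * ti + E * ti ** 2) for ti, bi in zip(t, b)]
At_e = [sum(row[i] * ei for row, ei in zip(A, e)) for i in range(3)]

print(C, D, E)
```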

Properties from Exercises

- The least sum of absolute errors ($\sum |e_i|$) comes from the median measurement. It is not influenced by outliers as heavily as least squares.

- If multiply by and then add to get , the solution of :

- The point $(\bar{t}, \bar{b})$, given by the means of the $t_i$ and the $b_i$, is on the least squares line.

- proof

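- When $A$ is a single column of ones, the solution $\hat{x}$ to $A^TA\hat{x} = A^Tb$ is the mean of $b$ ($\hat{x} = (b_1 + \cdots + b_m)/m$).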

- $\hat{x}$ is an unbiased estimate of $x$

- proof

- The errors $e_i$ are independent with variance $\sigma^2$

- $e$ is in the left nullspace of $A$ ($N(A^T)$), $p$ is in the column space of $A$ ($C(A)$), and $\hat{x}$ is in the row space of $A$ ($C(A^T)$)

- If $A$ has dependent columns, then $A^TA$ is not invertible and the usual formula $P = A(A^TA)^{-1}A^T$ will fail. Replace $A$ in that formula by the matrix $B$ that keeps only the pivot columns of $A$.
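The median property at the top of this list can be illustrated with a small made-up data set containing one outlier. The sketch fits a single constant $x$ to the measurements and compares the two error measures; the helper names `sum_abs` and `sum_sq` are hypothetical.

```python
from statistics import mean, median

b = [1, 2, 3, 4, 100]  # measurements with one outlier (illustrative)

def sum_abs(x):
    """Sum of absolute errors |b_i - x|."""
    return sum(abs(bi - x) for bi in b)

def sum_sq(x):
    """Sum of squared errors (b_i - x)^2."""
    return sum((bi - x) ** 2 for bi in b)

x_median = median(b)  # minimizes the sum of absolute errors
x_mean = mean(b)      # minimizes the sum of squared errors

print(x_median, sum_abs(x_median), sum_sq(x_median))
print(x_mean, sum_abs(x_mean), sum_sq(x_mean))
```

The outlier pulls the mean far from the bulk of the data, while the median stays with the middle measurement.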