How to Assess Model Accuracy?

The Quality of Fit

training MSE

- computed using the training data

- LESS IMPORTANT!

test MSE

- computed using test observations, i.e. observations that were not used to train the model

- MORE IMPORTANT!

- How to get test observations?

- cross-validation (Chapter 05)
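As a rough illustration of how held-out observations give an estimate of test MSE, here is a minimal k-fold cross-validation sketch. The polynomial model, the synthetic sine data, and the helper names `mse` and `kfold_test_mse` are assumptions made for this example only; they are not from the notes.

```python
import numpy as np

def mse(y_true, y_pred):
    """Mean squared error between observed and predicted responses."""
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def kfold_test_mse(x, y, degree, k=5, seed=0):
    """Estimate the test MSE of a polynomial fit by k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(x)), k)
    scores = []
    for i in range(k):
        test_idx = folds[i]  # held out: never used for fitting in this round
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        coefs = np.polyfit(x[train_idx], y[train_idx], degree)
        scores.append(mse(y[test_idx], np.polyval(coefs, x[test_idx])))
    return float(np.mean(scores))

# Hypothetical data: a noisy sine curve.
rng = np.random.default_rng(1)
x = rng.uniform(0, 3, size=100)
y = np.sin(2 * x) + rng.normal(scale=0.3, size=100)
cv_mse = kfold_test_mse(x, y, degree=3)
print(f"5-fold CV estimate of test MSE: {cv_mse:.3f}")
```

Each observation is used as a test observation exactly once, so no separate test set has to be collected.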

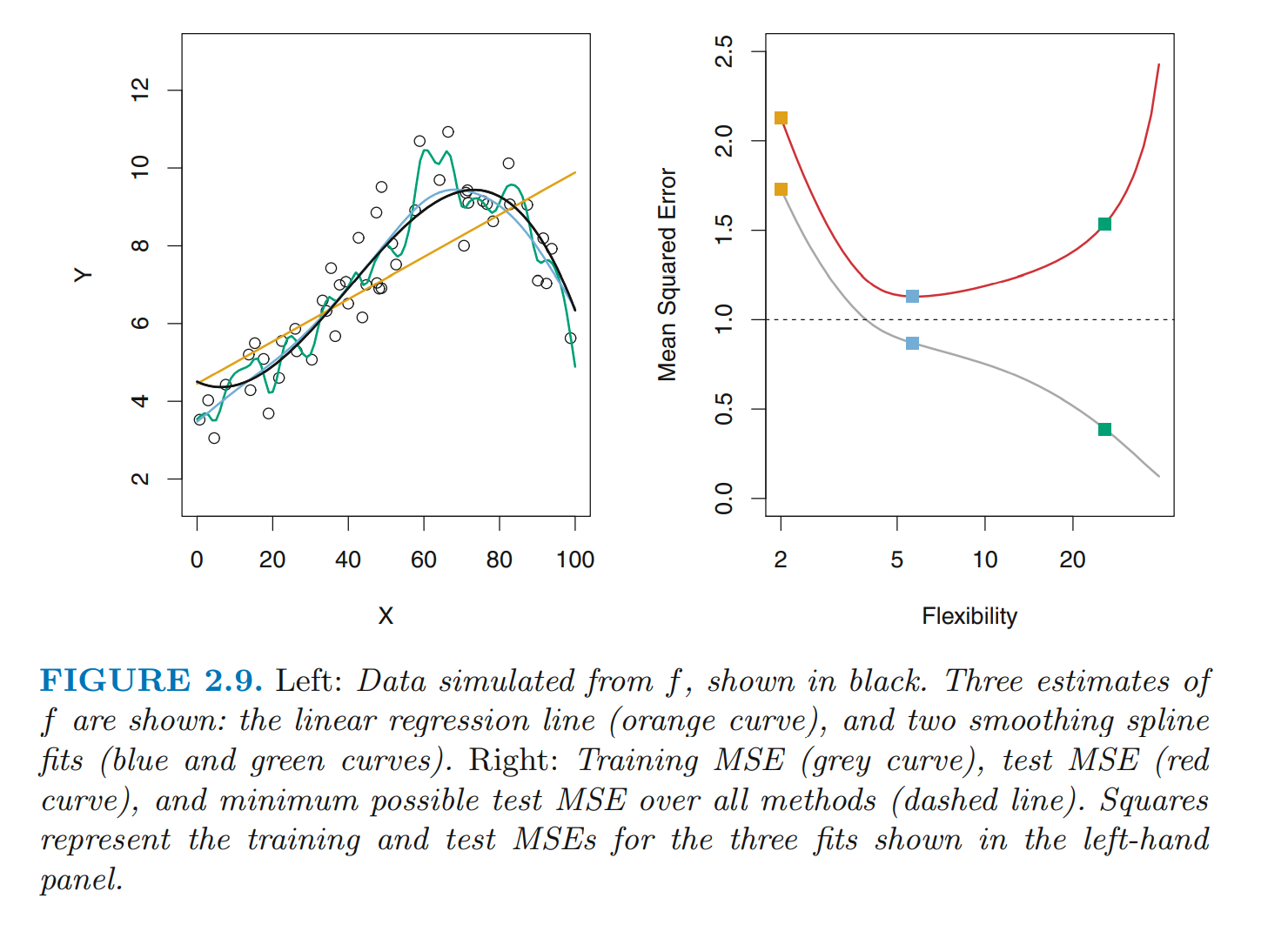

training MSE vs. test MSE

- as the model becomes more flexible (degrees of freedom increase)

- training MSE decreases

- test MSE may decrease at first, then increase

- overfitting

- occurs when a model yields a small training MSE but a large test MSE

- the result of the model learning patterns that are caused only by random error
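The behaviour described above can be sketched with polynomial regression of increasing degree. The true function, noise level, sample sizes, and degrees below are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

def true_f(x):
    # Assumed "true" relationship, for illustration only.
    return np.sin(2 * x)

def sample(n):
    """Draw n noisy observations from the assumed model."""
    x = rng.uniform(0, 3, size=n)
    return x, true_f(x) + rng.normal(scale=0.3, size=n)

x_train, y_train = sample(30)    # small training set
x_test, y_test = sample(1000)    # large set of unseen observations

degrees = [1, 3, 5, 8]           # higher degree = more flexible model
train_mse, test_mse = [], []
for d in degrees:
    coefs = np.polyfit(x_train, y_train, d)
    train_mse.append(float(np.mean((y_train - np.polyval(coefs, x_train)) ** 2)))
    test_mse.append(float(np.mean((y_test - np.polyval(coefs, x_test)) ** 2)))

for d, tr, te in zip(degrees, train_mse, test_mse):
    print(f"degree={d}  train MSE={tr:.3f}  test MSE={te:.3f}")
```

The training MSE cannot increase as the degree grows, because each polynomial family contains the previous one. The test MSE has no such guarantee, which is where overfitting shows up.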

The Bias-Variance Trade-Off

variance

- the amount by which the fitted model would change if it were estimated on a different training data set

- a more flexible model (more degrees of freedom) has higher variance

bias

- the error introduced by approximating a complicated real problem with a simpler model

- a more flexible model (more degrees of freedom) has lower bias
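For reference, the trade-off is usually summarized by the standard decomposition of the expected test MSE at a point $x_0$. The notation ($\hat f$ for the fitted model, $\varepsilon$ for the random error term) is assumed here, since the notes do not fix symbols.

$$
E\big(y_0 - \hat f(x_0)\big)^2 = \operatorname{Var}\big(\hat f(x_0)\big) + \big[\operatorname{Bias}\big(\hat f(x_0)\big)\big]^2 + \operatorname{Var}(\varepsilon)
$$

The first two terms move in opposite directions as flexibility changes, while $\operatorname{Var}(\varepsilon)$ is the irreducible error and sets a floor on the expected test MSE.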

The Classification Setting

The Quality of Fit

error rate

training error rate

test error rate
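A minimal sketch of the error rate as the fraction of misclassified observations. The function name `error_rate` and the labels are made up for illustration. The same function gives the training or the test error rate depending on which observations are passed in.

```python
import numpy as np

def error_rate(y_true, y_pred):
    """Fraction of observations whose predicted class differs from the true class."""
    return float(np.mean(np.asarray(y_true) != np.asarray(y_pred)))

# Hypothetical labels for illustration.
y_true = np.array([1, 1, 2, 2, 1])
y_pred = np.array([1, 2, 2, 2, 2])
rate = error_rate(y_true, y_pred)  # 2 of the 5 predictions are wrong
print(rate)
```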

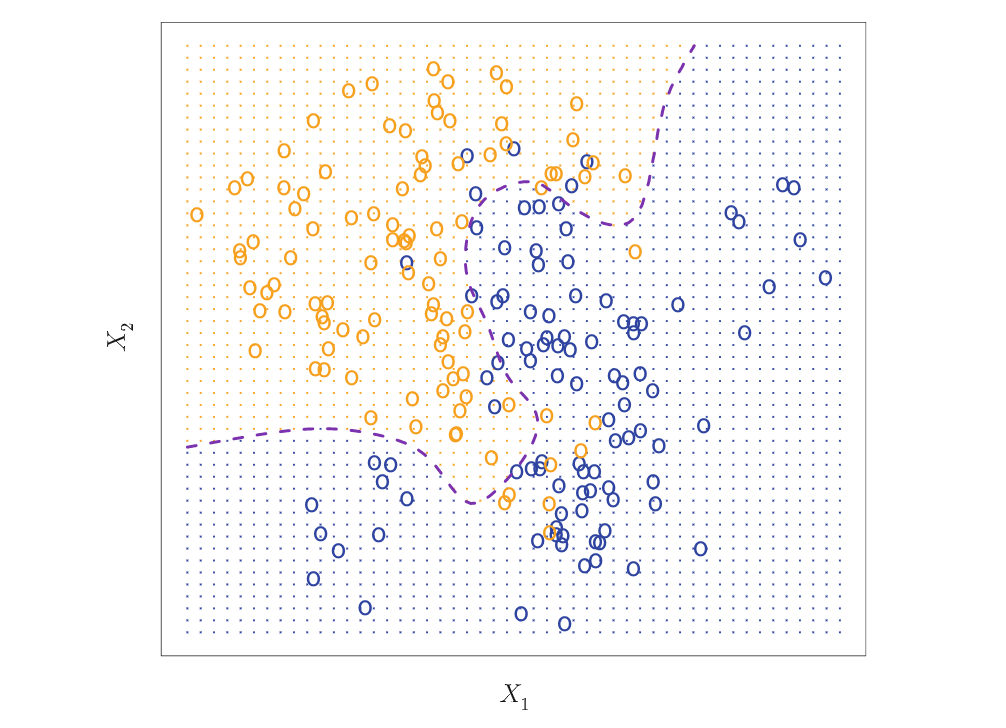

The Bayes Classifier

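Given the observed predictor vector $x_0$, assign the observation to the class $j$ for which the probability that $Y = j$, i.e. $\Pr(Y = j \mid X = x_0)$, is largest.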

Ideal Situation

two-class example

- class 1 and class 2

Bayes decision boundary

- the points where the probability is exactly 0.5

- determines the Bayes classifier's prediction

drawn as the purple dashed line in the figure

Bayes error rate

- the lowest possible test error rate, achieved by the Bayes classifier

- analogous to the irreducible error
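The Bayes classifier can only be computed when the true conditional distribution is known, which in practice means simulated data. The sketch below assumes a hypothetical one-dimensional model: two classes with equal priors, $X \mid Y=1 \sim N(-1, 1)$ and $X \mid Y=2 \sim N(1, 1)$. All names and parameter values are illustrative assumptions.

```python
import math
import numpy as np

MU1, MU2, SIGMA = -1.0, 1.0, 1.0  # assumed class means and shared std dev

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2) evaluated at x."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))

def posterior_class1(x):
    """Pr(Y = 1 | X = x) under equal priors, by Bayes' theorem."""
    p1 = normal_pdf(x, MU1, SIGMA)
    p2 = normal_pdf(x, MU2, SIGMA)
    return p1 / (p1 + p2)

def bayes_classify(x):
    """Assign class 1 where its posterior exceeds 0.5, otherwise class 2."""
    return np.where(posterior_class1(x) > 0.5, 1, 2)

# Simulate from the assumed model and estimate the Bayes error rate.
rng = np.random.default_rng(0)
n = 200_000
y = rng.integers(1, 3, size=n)                     # classes 1 and 2, equal priors
x = rng.normal(np.where(y == 1, MU1, MU2), SIGMA)  # draw X given the class
bayes_error_estimate = float(np.mean(bayes_classify(x) != y))

# For this particular model the exact value is the standard normal CDF at -1.
bayes_error_exact = 0.5 * (1 + math.erf(-1 / math.sqrt(2)))
print(bayes_error_estimate, bayes_error_exact)
```

In this model the Bayes decision boundary is the single point $x = 0$, where the posterior is exactly 0.5. No classifier can achieve a lower test error rate on data from this model than the Bayes error rate.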

K-Nearest Neighbors

$x_0$: the test observation

$K$: the number of training points selected nearest to $x_0$

- larger $K$: decision boundary less flexible

- low variance, high bias

- smaller $K$: decision boundary more flexible

- high variance, low bias

$N_0$: the selected training data (the $K$ points closest to $x_0$)
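A minimal sketch of K-nearest-neighbors classification by majority vote, assuming Euclidean distance. The function name `knn_predict` and the toy data are hypothetical.

```python
import numpy as np
from collections import Counter

def knn_predict(x_train, y_train, x0, k):
    """Classify x0 by majority vote among its k nearest training points."""
    dists = np.linalg.norm(x_train - x0, axis=1)  # Euclidean distance to x0
    nearest = np.argsort(dists)[:k]               # indices of the k closest points
    votes = Counter(y_train[nearest].tolist())    # class counts in the neighborhood
    return votes.most_common(1)[0][0]

# Hypothetical toy data: two clusters in the plane.
x_train = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                    [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]])
y_train = np.array([1, 1, 1, 2, 2, 2])

pred_a = knn_predict(x_train, y_train, np.array([0.1, 0.1]), k=3)
pred_b = knn_predict(x_train, y_train, np.array([1.0, 0.9]), k=3)
print(pred_a, pred_b)
```

The vote share of each class among the selected points serves as the estimate of the conditional class probability, so the choice of `k` directly controls how smooth the resulting decision boundary is.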